Practical Applications of Peer-to-Peer Networks in File Sharing and Content Distribution

This blog post will cover:

- Understanding Peer-to-Peer Networks

- Peer-to-Peer File Sharing

- P2P Networks in Content Distribution

- Conclusion

People's propensity to establish decentralized groups is often a reaction to the strain or shortcomings of centralized systems.

Such associations of rank-and-file members, each weaker than a well-organized and centrally managed organization, give those members an effective and relatively durable way to resist or assert their rights: a distributed network pools its members' resources and can withstand the loss of some of them.

In the era of computers, decentralized networks are a form of self-organization with shared objectives, methods, and outcomes. Every member of a peer-to-peer network is on an equal footing: every computer can function as both a data source and a data consumer. P2P networks connect individual devices logically, as an overlay: they apply their own set of rules "on top" of an existing network such as the Internet. They are designed so that all users benefit from pooled resources and the network as a whole can withstand the loss of any individual node.

Today we'll examine why peer-to-peer networks matter and how they are used in practice for file sharing and content distribution.

Understanding Peer-to-Peer Networks

The traditional architecture of computer networks relies on servers, which are higher-ranking members tasked with organizing the entire structure, and clients, which are regular members whose only role is to send requests to servers, receive responses, and carry out commands.

Peer-to-peer networks are built on the idea that equal peer nodes act as both "clients" and "servers" to other nodes on the network at the same time. Every computer in the network can send requests to other members and respond to theirs. The rules that make such a system work are carried out by programs, usually called clients, that run concurrently on every member of the network. The rules themselves are designed to tolerate the failure of any individual member. In many P2P systems, members also verify one another's actions, which helps keep the network stable and resistant to takeover attempts.
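
To make the dual role concrete, here is a minimal in-memory sketch. The `Peer` class and its `serve` and `fetch` methods are hypothetical names chosen for illustration; a real client would talk over sockets and handle failures, which this sketch leaves out.

```python
class Peer:
    """A node that can both serve files and request them (illustrative model)."""

    def __init__(self, name):
        self.name = name
        self.files = {}      # filename -> bytes held locally
        self.neighbors = []  # other Peer objects this node knows about

    def connect(self, other):
        # Links are symmetric: neither side is "the server".
        if other not in self.neighbors:
            self.neighbors.append(other)
            other.neighbors.append(self)

    def serve(self, filename):
        # "Server" role: answer a request from another peer.
        return self.files.get(filename)

    def fetch(self, filename):
        # "Client" role: ask each neighbor until one has the file.
        for peer in self.neighbors:
            data = peer.serve(filename)
            if data is not None:
                self.files[filename] = data  # this peer can now serve it too
                return data
        return None


alice, bob, carol = Peer("alice"), Peer("bob"), Peer("carol")
alice.connect(bob)
bob.connect(carol)
alice.files["song.mp3"] = b"audio-bytes"

bob.fetch("song.mp3")    # bob acts as a client of alice
carol.fetch("song.mp3")  # bob now acts as a server for carol
```

Carol never contacts Alice: she gets the file from Bob, who was a consumer a moment earlier.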

Types of Peer-to-Peer Networks

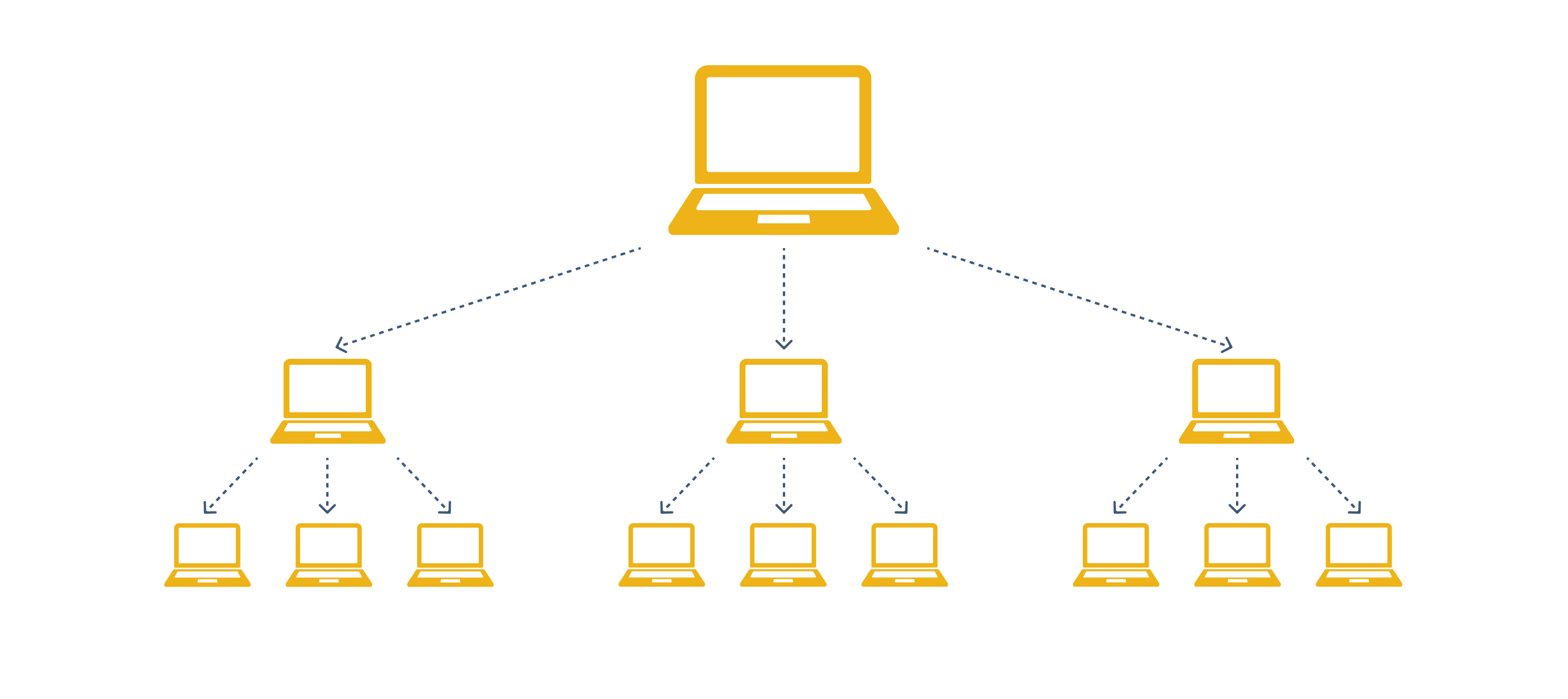

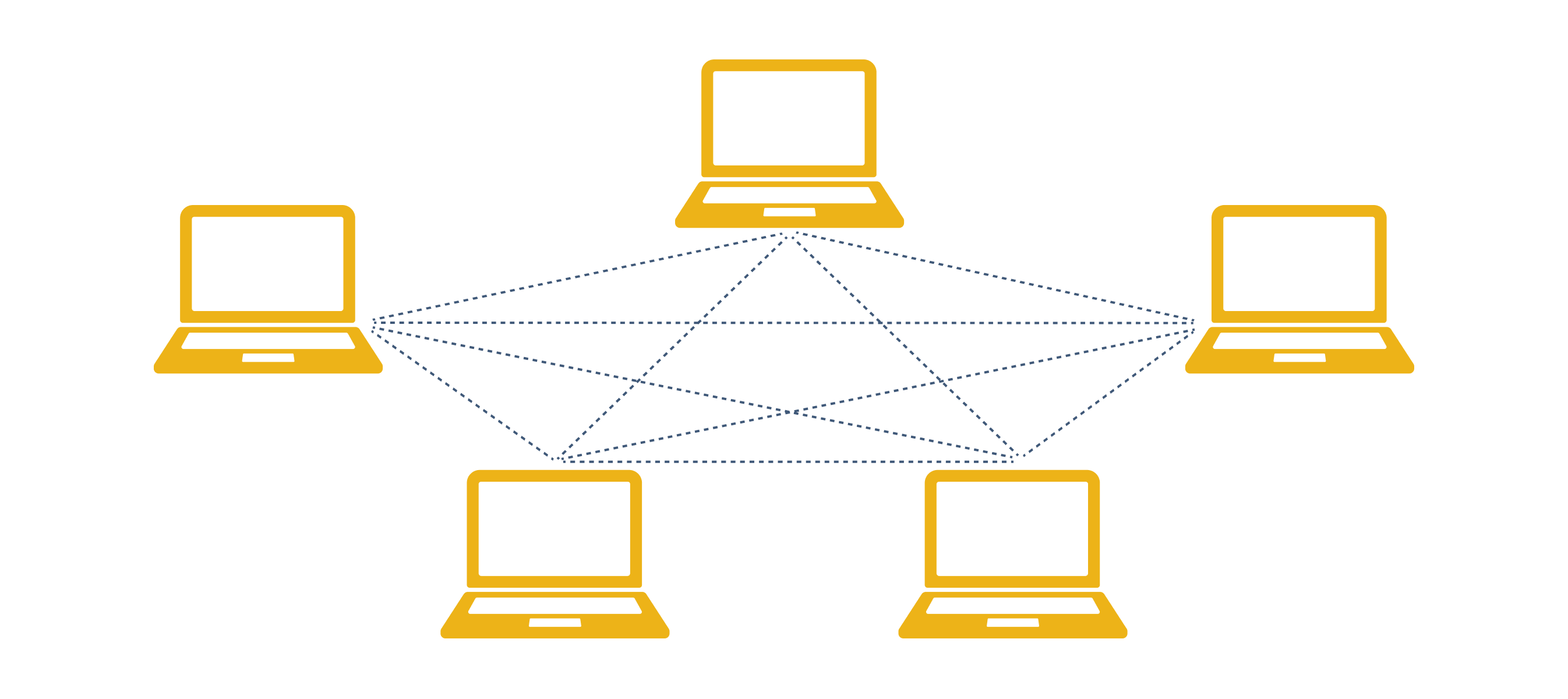

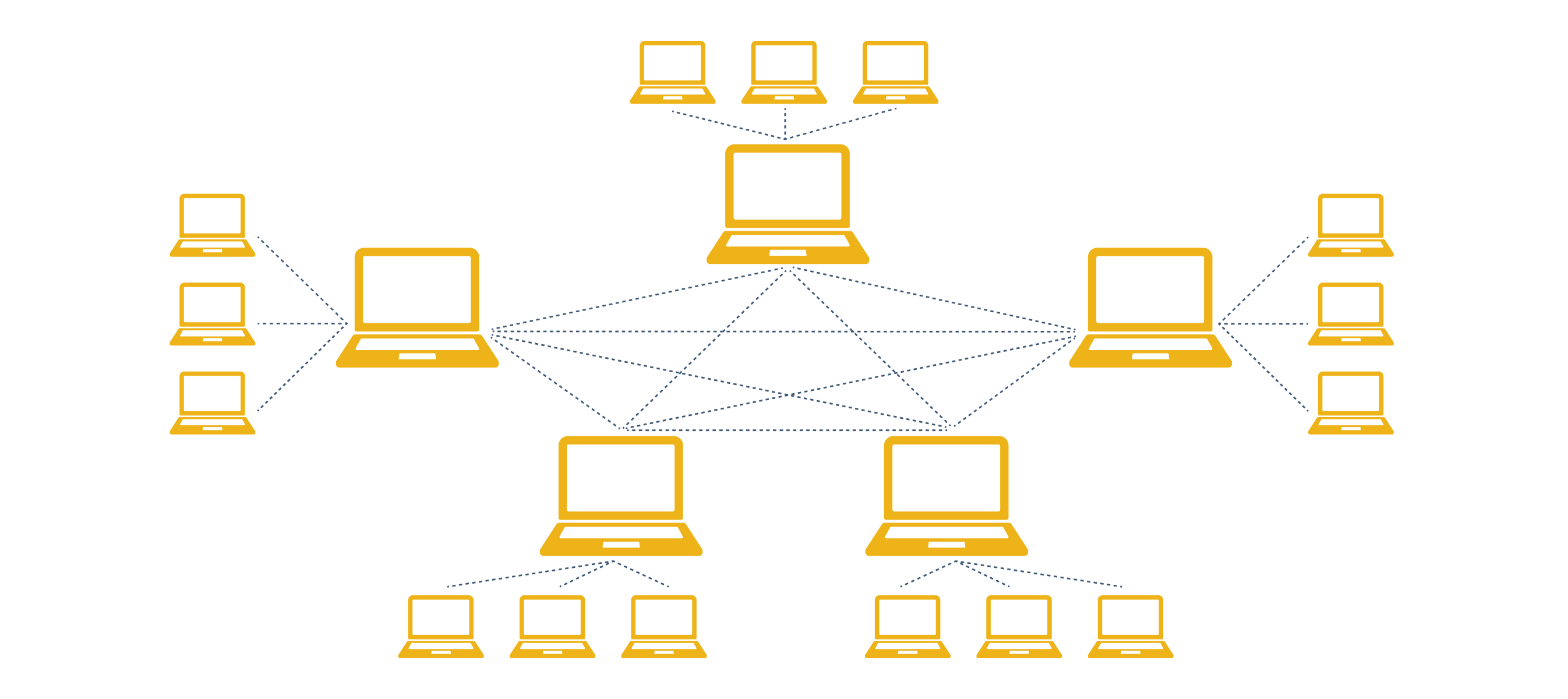

Peer-to-peer networks fall into three categories: structured, unstructured, and hybrid.

Structured peer-to-peer networks

Nodes in these networks are organized into a defined topology, so a node can locate a file efficiently even when it is rare or held by only a few peers. Because every node must follow this ordered framework, structured P2P networks are more tightly coordinated than unstructured ones. Although they make data easy to find, they need more setup and maintenance work than unstructured peer-to-peer networks.
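
One common way to build such a structure is a distributed hash table (DHT). The sketch below is a simplified illustration, not any particular network's protocol: node names and file keys are hashed into the same ID space, and the node whose ID is closest to a key (by XOR distance, an idea borrowed from Kademlia-style designs) is responsible for it. The helper names are hypothetical, and the sketch assumes every node knows the full node list, which real DHTs avoid by routing in several hops.

```python
import hashlib


def node_id(label: str) -> int:
    # Map any string (node name or file key) into the same 160-bit ID space.
    return int.from_bytes(hashlib.sha1(label.encode()).digest(), "big")


def responsible_node(key: str, nodes: list[str]) -> str:
    # The node whose ID is closest to the key's ID (XOR distance) owns the key.
    target = node_id(key)
    return min(nodes, key=lambda n: node_id(n) ^ target)


nodes = ["node-a", "node-b", "node-c", "node-d"]
index = {n: {} for n in nodes}  # each node's slice of the global index


def publish(key: str, holder: str) -> None:
    # Record "holder has key" on the node responsible for that key.
    index[responsible_node(key, nodes)][key] = holder


def lookup(key: str):
    # Any peer can compute where to ask, so no search or flooding is needed.
    return index[responsible_node(key, nodes)].get(key)


publish("ubuntu.iso", "peer-42")
holder = lookup("ubuntu.iso")
```

Because the owner of a key is computed rather than searched for, a lookup succeeds even when only one peer holds the file.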

Unstructured peer-to-peer networks

There is no fixed node structure in such a network, so users are free to join and leave whenever they like. Because there is no defined framework, nodes connect to one another at random. Finding a file usually means passing the query from node to node across much of the network, so these networks consume a lot of processing power and bandwidth, especially when many searches run at once.
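
The cost of searching an unstructured network can be seen in a small flooding sketch. This is an illustration under assumed names (`flood_search`, a hand-written five-node graph), not a specific protocol: each query is forwarded to every neighbor until a time-to-live (TTL) counter runs out.

```python
def flood_search(graph, files, start, filename, ttl):
    """Forward a query to all neighbors, hop by hop, until the TTL runs out."""
    visited = {start}
    frontier = [start]
    messages = 0
    hits = []
    if filename in files.get(start, set()):
        hits.append(start)
    while frontier and ttl > 0:
        next_frontier = []
        for node in frontier:
            for neighbor in graph[node]:
                messages += 1  # every forwarded query costs bandwidth
                if neighbor in visited:
                    continue   # duplicate query, dropped by the receiver
                visited.add(neighbor)
                if filename in files.get(neighbor, set()):
                    hits.append(neighbor)
                next_frontier.append(neighbor)
        frontier = next_frontier
        ttl -= 1
    return hits, messages


# Hypothetical topology: only node "e" holds the file.
graph = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d"],
    "d": ["b", "c", "e"],
    "e": ["d"],
}
files = {"e": {"paper.pdf"}}

near = flood_search(graph, files, "a", "paper.pdf", ttl=2)  # file is out of reach
far = flood_search(graph, files, "a", "paper.pdf", ttl=3)   # file is found
```

With a TTL of 2 the query never reaches the holder; raising it to 3 finds the file but sends more messages, many of them duplicates. That trade-off is why flooding scales poorly.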

Hybrid peer-to-peer networks

This kind of P2P network blends P2P design with elements of the conventional client-server model, in which duties are split between clients and servers that interact over the Internet or another computer network. For instance, a hybrid network can use a central server to locate a node that holds a file, while the file itself is transferred directly between peers.
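
A hybrid lookup can be sketched as a central directory plus direct transfers. The `IndexServer` class and `download` function are hypothetical names for illustration; the point is that the server stores only who has what, never the data itself.

```python
class IndexServer:
    """Central directory: knows who has what, but never stores file data."""

    def __init__(self):
        self.directory = {}  # filename -> set of peer names

    def register(self, peer_name, filenames):
        for name in filenames:
            self.directory.setdefault(name, set()).add(peer_name)

    def locate(self, filename):
        return sorted(self.directory.get(filename, set()))


# Each peer's local storage: peer name -> {filename: bytes}
peers = {"alice": {"talk.mp4": b"video"}, "bob": {}}
server = IndexServer()
server.register("alice", peers["alice"])


def download(requester, filename):
    holders = server.locate(filename)       # step 1: ask the central index
    if not holders:
        return None
    data = peers[holders[0]][filename]      # step 2: copy directly from a peer
    peers[requester][filename] = data
    server.register(requester, [filename])  # the requester becomes a source too
    return data


result = download("bob", "talk.mp4")
```

If the index server disappears, peers keep their files but can no longer find one another, which is the weakness of this design.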

History of Peer-to-Peer Networks

Peer-to-peer projects gained popularity among mass consumers in the early 2000s. Computers and networks had opened up new opportunities for distributing information, but content sellers and copyright holders were pleased with the status quo and saw no need to take advantage of these advancements.

As a result, distributed file-sharing programs started to emerge, allowing users to freely share books, movies, and music between computers connected to a shared network. These networks and projects include Napster, Kazaa, eDonkey2000, Direct Connect (DC), and BitTorrent.

It was an era of open conflict between peer-to-peer networks and copyright holders. Vendors of records, CDs, and paper books, settled in their brick-and-mortar businesses, did not take P2P in stride; they felt money slipping through their fingers like sand, yet they were unwilling to change how they did business.

Arrests, bans, fines, and lawsuits followed. Numerous projects came to an end, some moved into a legal "gray" area, and some simply went silent. It should be highlighted, nevertheless, that making full use of the technology, rather than prohibiting it outright, would have been the better and more effective strategy for growing the rights holders' business.

Peer-to-Peer Networks and Anonymity

Computers and networks have made communicating information easier than ever, and knowledge is the foundation of authority, control, and power. Centralized organizations are built on restricted access to resources. In such a hierarchy, certain privileged members have access to resources, while ordinary members are expected to be satisfied with what the privileged give them.

Anonymized P2P projects and technologies like Tor, Freenet, and I2P emerged from this conflict between the opportunities for information exchange that modern technologies provide and the desire of states and corporations to limit them.

The primary objective of such projects is to keep any participant who supplies or retrieves information through the network from being discovered and identified. To achieve this, they employ intricate and sometimes perplexing techniques: multiple layers of encryption, masking of the senders and recipients of communication, imitation of other protocols and technologies, and multi-stage paths that are hard to trace.

Advantages of P2P Networks

Here are some of the advantages of such networks:

- P2P networks are easy to configure and install.

- All users share resources and content, unlike in a client-server architecture, where only the server distributes resources and provides users with information.

- Reliability is much greater: the failure or disconnection of one node does not bring down the entire network.

- No dedicated network administrator is needed, since each node administers itself.

- The cost of building and operating such a network is relatively low.

Challenges and Limitations of P2P Networks

Peer-to-peer networks have drawbacks as well as benefits. Because there is no central server to scan or filter what is shared, malware can spread from an infected node to other participating nodes. Similarly, since the system is decentralized, nothing stops nodes from disseminating copyrighted content.

P2P networks frequently contain a sizable group of users who consume resources shared by other nodes while keeping their own resources private. These freeloader nodes, often called "leeches," drain capacity without contributing any, and their example can encourage others to stop sharing as well.

Peer-to-Peer File Sharing

The emergence of Napster in 1999, a trailblazing platform that enabled users to share MP3 files directly with one another, brought P2P file sharing into the mainstream. By bypassing conventional distribution channels and letting users exchange music files among themselves, Napster broke new ground. Nevertheless, its centralized indexing mechanism contributed to its demise: the index made Napster the target of multiple copyright infringement lawsuits, which led to its shutdown in 2001.

The demise of Napster made room for more sophisticated P2P networks, like BitTorrent. BitTorrent, which Bram Cohen created in 2001, brought a more efficient way to share files. In contrast to Napster, BitTorrent decentralized file distribution by slicing files into smaller pieces and spreading them across many users at once. This strategy improved download speeds while reducing the load on any one machine, which made the system more resilient and scalable.

How P2P File Sharing Works

P2P file sharing relies on a few essential steps that make distribution efficient and decentralized. A file is first divided into smaller sections, known as chunks or pieces. Each piece can be downloaded separately from peers who have either the entire file or only parts of it. Because multiple peers can upload and download different pieces of the file at the same time, the transfer is greatly accelerated.
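
The splitting step can be sketched in a few lines. The piece size and function names below are assumptions for illustration, and SHA-256 is used for the per-piece checksums simply because it is in the Python standard library; the hash a given protocol uses may differ.

```python
import hashlib

PIECE_SIZE = 4  # bytes; unrealistically small so the example stays readable


def split_into_pieces(data: bytes, size: int = PIECE_SIZE) -> list[bytes]:
    # Cut the file into fixed-size pieces; the last one may be shorter.
    return [data[i:i + size] for i in range(0, len(data), size)]


def piece_hashes(pieces: list[bytes]) -> list[str]:
    # One checksum per piece, published alongside the file's metadata.
    return [hashlib.sha256(p).hexdigest() for p in pieces]


def reassemble(received: dict[int, bytes], expected: list[str]) -> bytes:
    # Verify every piece against its checksum, then join them in index order.
    out = []
    for index, digest in enumerate(expected):
        piece = received[index]
        if hashlib.sha256(piece).hexdigest() != digest:
            raise ValueError(f"piece {index} failed verification")
        out.append(piece)
    return b"".join(out)


original = b"hello peer-to-peer"
pieces = split_into_pieces(original)
hashes = piece_hashes(pieces)

# Pieces may arrive in any order and from different peers.
received = dict(reversed(list(enumerate(pieces))))
restored = reassemble(received, hashes)
```

Checking each piece against a known hash is what lets a downloader accept data from strangers: a corrupted or forged piece is rejected and requested again from someone else.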

Trackers play an essential part in coordinating this exchange. A tracker is a server that helps peers find each other. It keeps track of which peers are currently sharing a given file and puts them in touch with one another; the peers then tell each other which pieces they hold. Users who possess the entire file and distribute it to others are known as seeders, while those who are still downloading the file are known as leechers. After obtaining the complete file, a leecher can turn into a seeder and improve the overall performance and health of the network.
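
A tracker's bookkeeping can be sketched as follows. The `Tracker` class is a hypothetical, in-memory stand-in: real trackers answer network requests, and here each peer simply reports how many pieces it still needs (loosely modeled on the `left` value that BitTorrent clients report when they announce).

```python
class Tracker:
    """Keeps a list of peers per shared file and introduces them to each other."""

    def __init__(self):
        self.swarms = {}  # torrent id -> {peer name: pieces still missing}

    def announce(self, torrent_id, peer, left):
        # Register (or update) the peer and return the other peers in the swarm.
        swarm = self.swarms.setdefault(torrent_id, {})
        others = [p for p in swarm if p != peer]
        swarm[peer] = left
        return others

    def counts(self, torrent_id):
        # Seeders have nothing left to download; everyone else is a leecher.
        swarm = self.swarms.get(torrent_id, {})
        seeders = sum(1 for left in swarm.values() if left == 0)
        return seeders, len(swarm) - seeders


tracker = Tracker()
first = tracker.announce("demo", "peer-a", left=0)   # a seeder joins
second = tracker.announce("demo", "peer-b", left=5)  # a leecher joins
before = tracker.counts("demo")

tracker.announce("demo", "peer-b", left=0)           # download finished
after = tracker.counts("demo")
```

Note that the tracker never touches file data; it only hands out introductions, and the transfer itself happens between peers.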

Case Studies of Popular P2P File Sharing Platforms

BitTorrent's inventive design and extensive usage have made it synonymous with P2P file sharing. By dividing large files into smaller pieces and distributing them among many users, it significantly speeds up downloads and lowers server loads. BitTorrent's influence goes beyond individual file sharing; businesses now use it to distribute big files efficiently, such as game patches and software updates. Despite these legitimate uses, BitTorrent has come under fire and faced legal challenges because of its association with the unauthorized distribution of copyrighted content.

Other well-known P2P file-sharing programs that surfaced in the early 2000s include eDonkey2000 and Gnutella. Jed McCaleb's eDonkey2000 used a hybrid approach that combined aspects of decentralized and centralized systems. Users liked it because it offered efficient downloads and thorough file searches. In contrast, Gnutella, created by Justin Frankel and Tom Pepper, had no central server and was fully decentralized. This fully distributed architecture made it resistant to shutdowns, but it also created difficulties for network performance and search.

P2P Networks in Content Distribution

P2P networks have reshaped several areas of content distribution, including media streaming, software distribution, and data backup and storage.

P2P in Media Streaming

Media streaming in conventional client-server setups depends on centralized servers to provide users with material; this can result in bottlenecks, expensive bandwidth, and single points of failure. P2P networks, on the other hand, decentralize this procedure, spreading the burden among several users and improving dependability and efficiency.

In P2P-based media streaming, each user's device not only plays the content but also shares it with other users. By drawing on the combined bandwidth of all participating devices, this approach significantly reduces the load on any one server and can deliver faster streaming and better scalability.

Popcorn Time is a well-known illustration of a P2P-based streaming service. Popcorn Time streams video straight from torrents using the BitTorrent protocol. This means that while users watch a movie or TV show, their devices simultaneously upload portions of the content to other users. With this decentralized method, expensive server infrastructure is not required for high-quality streaming. Despite its innovative technology, Popcorn Time has run into legal trouble because it makes it easy for users to access copyrighted content without authorization.
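
A back-of-the-envelope model shows why pooled upload bandwidth matters. The function below is an idealized sketch with made-up numbers: it assumes every viewer watches at the same bitrate, contributes the same upload capacity, and that peer capacity can be used perfectly, none of which holds exactly in practice.

```python
def server_bandwidth_needed(viewers, bitrate_mbps, peer_upload_mbps=0):
    """Upload capacity (Mbps) the origin server must provide in this toy model."""
    total_demand = viewers * bitrate_mbps     # what all viewers consume
    peer_supply = viewers * peer_upload_mbps  # what viewers give back
    # The server must always inject at least one copy of the stream.
    return max(bitrate_mbps, total_demand - peer_supply)


# Hypothetical audience: 1,000 viewers of a 5 Mbps stream.
client_server = server_bandwidth_needed(1000, 5)                   # 5000
partial_p2p = server_bandwidth_needed(1000, 5, peer_upload_mbps=4) # 1000
full_p2p = server_bandwidth_needed(1000, 5, peer_upload_mbps=5)    # 5
```

In the pure client-server case the server's cost grows with every new viewer; once peers upload as much as they download, the server's share stays flat no matter how large the audience gets.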

P2P in Software Distribution

P2P networks are also frequently utilized for the large-scale distribution of software applications and upgrades. Conventional distribution techniques can be costly and time-consuming, particularly when handling big files or a lot of downloads. By dividing the load among several peers, P2P technology solves these problems and improves process efficiency and economy.

Top video game producer Blizzard Entertainment has used P2P technology to distribute updates for its popular titles. When Blizzard released a big update for a game like World of Warcraft, for instance, each player's computer could help pass the update along to other players. This not only sped up downloads but also lowered Blizzard's bandwidth costs, because the load was spread across the players' computers.

Microsoft is another noteworthy example: it has built P2P technology into Windows Update through a feature called Delivery Optimization. When the feature is turned on, a Windows device can download parts of an update from other users' devices in addition to Microsoft's servers, which can make updates arrive more quickly.

P2P in Data Backup and Storage

Conventional cloud storage solutions depend on centrally located servers, which are exposed to risks such as server malfunctions and data breaches. P2P storage platforms, on the other hand, spread data over many nodes so that no single point of failure can take the whole system down.

Two well-known P2P storage systems are Storj and the InterPlanetary File System (IPFS). Storj offers decentralized cloud storage by combining P2P networking with blockchain technology. It encrypts data, breaks it into smaller parts, and distributes those parts over a global network of nodes. To get their data back, users retrieve the pieces from several nodes and reassemble them, an arrangement that provides both security and redundancy.
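
The redundancy idea can be illustrated with the simplest possible scheme: split the data into shards and add one XOR parity shard, so that any single lost shard can be rebuilt. This is a teaching sketch only. It is not Storj's actual scheme, it omits encryption entirely, and production systems typically use stronger erasure codes that survive several simultaneous losses. All function names are hypothetical.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_shards(data: bytes, k: int) -> list[bytes]:
    # Split into k equal data shards (zero-padded) plus one parity shard.
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = shards[0]
    for shard in shards[1:]:
        parity = xor_bytes(parity, shard)
    return shards + [parity]


def recover(shards: list, original_len: int) -> bytes:
    # Rebuild the data when at most one shard (marked None) is missing.
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one lost shard")
    if missing:
        present = [s for s in shards if s is not None]
        rebuilt = present[0]
        for shard in present[1:]:
            rebuilt = xor_bytes(rebuilt, shard)
        shards = shards[:missing[0]] + [rebuilt] + shards[missing[0] + 1:]
    return b"".join(shards[:-1])[:original_len]  # drop parity and padding


data = b"decentralized backup"
stored = make_shards(data, k=4)  # imagine each shard on a different node

damaged = list(stored)
damaged[2] = None                # one node goes offline
restored = recover(damaged, len(data))
```

Each shard would live on a different node, so losing one node costs nothing; the price is the extra storage for the parity shard.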

IPFS, on the other hand, uses a P2P network for file sharing and storage with the goal of establishing a decentralized web. When a file is added to IPFS, it is divided into smaller chunks, each chunk is hashed, and the chunks are shared among several nodes. Because each chunk is identified by the cryptographic hash of its own content, the data can be located by what it is rather than where it is stored, and verified on retrieval. The decentralized nature of IPFS improves data availability because files can be fetched from several sources, even when some nodes go down.
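
Content addressing can be sketched with a small in-memory store. This is a simplified illustration, not the IPFS API: real IPFS uses content identifiers (CIDs) and a richer linked data structure, while the hypothetical `ContentStore` below just uses a SHA-256 hex digest as each block's address and a plain list of chunk addresses as the file's root.

```python
import hashlib


class ContentStore:
    """Blocks are stored and fetched by the hash of their own content."""

    def __init__(self):
        self.blocks = {}

    def put(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        self.blocks[key] = data
        return key

    def get(self, key: str) -> bytes:
        data = self.blocks[key]
        # Anyone can verify a block: it must hash to the address it was asked by.
        if hashlib.sha256(data).hexdigest() != key:
            raise ValueError("block does not match its address")
        return data


def add_file(store: ContentStore, data: bytes, chunk_size: int = 8) -> str:
    # Store each chunk, then store the list of chunk addresses as a root block.
    keys = [store.put(data[i:i + chunk_size])
            for i in range(0, len(data), chunk_size)]
    return store.put("\n".join(keys).encode())


def read_file(store: ContentStore, root: str) -> bytes:
    keys = store.get(root).decode().splitlines()
    return b"".join(store.get(k) for k in keys)


store = ContentStore()
content = b"the same bytes, the same address"
root = add_file(store, content)
restored = read_file(store, root)
```

Identical content always produces the same address, so it does not matter which node serves a block: the requester can check the bytes against the hash it asked for.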

Conclusion

P2P networks, which offer decentralized, practical, and economical solutions, have fundamentally altered the way that content is delivered. Their applications in media streaming, software distribution, and data storage demonstrate their versatility and significance in modern technology. To fully realize their promise and foster a more resilient and connected digital environment, we must continue to develop P2P networks and use them responsibly.

SimpleSwap reminds you that this article is provided for informational purposes only and does not provide investment advice. All purchases and cryptocurrency investments are your own responsibility.